The meta-analysis can be done using following models.

1. Fixed effect model

2. Random effects model

Selection of appropriate model depends upon the heterogeneity amongst the included studies. (see below for details)

Fixed effect meta-analysis: Steps

1. Calculating / extracting effect size = OR (Odds ratio) for each included studies.

OR = ad/bc

(where a = number of exposed in cases, b= number of non-exposed in cases, c= number of exposed in controls

, d= number of non-exposed in controls)

2. Calculating LogeOR for each included studies. (Log Odds Ratio base e)

3. Calculating Variance (V) and Standard Error of Log (OR)

Variance for ith study (Vi) = (1/a + 1/b + 1/c + 1/d)

SELog OR = sqrt(1/a + 1/b + 1/c + 1/d)

4. Calculating confidence intervals for Log (OR) and converting them to original scale for each study.

A. Confidence intervals for Log OR

LB CI = Log (OR) - Z1-α/2 * SE Log OR

UB CI = Log (OR) + Z1-α/2 * SE Log OR

B. Confidence intervals of OR

We can convert above confidence intervals into original scale by following formula.

OR = eLog OR

5. Weights (W) of each included studies under fixed effect model (inverse variance method)

Wi = 1/Vi

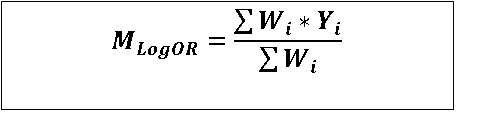

6. Summary Log OR (meta-analysis Log OR) under fixed effect model is calculated as follows

MALog OR = Summary Log OR

Wi= Weight for ith included study

Yi= Log OR for ith included study

7. Standard Error of MALog OR = 1/Σ Wi

Once summary Log OR and its standard error is calculated, it is fairly easy to calculate confidence intervals.

We can convert summary Log OR and its confidence intervals into original scale as described above under point 4(B).

8. Z value and p value.

Once summary Log OR and its standard error is calculated, it is fairly easy to calculate Z value and p value using normal distribution.

Z = MALog OR / Standard Error of MALog OR

If available data is Odds Ratio and its confidence interval, then log Odds Ratio is initially calculated.

SE of log Odds Ratio is calculated from UB and LB of confidence intervals, as follows.

SELog OR = (UBLog OR - Log OR) / Z1−α/2

It can be also calculated as follows

SELog OR = (Log OR - LBLog OR) / Z1−α/2

Variance (V) = SE2

Measures of heterogeneity

Not all included studies are homogeneous with respect to characteristics of participants such as age, gender, geo-environmental factors, other socio-demographic factors,

selection criteria for cases etc. When these differences are present, variability in the study results is not just random, and the

studies are considered as heterogeneous. This heterogeneity is quantified by following measures of heterogeneity.

1. Cochran's Q statistics

Q = Σ Wi * (Yi - M)2

Yi = Log OR for ith study

M = Summary Log OR (Meta-analysis Log OR)

Q statistics follows chi-square distribution with k - 1 degrees of freedom (k= number of included studies)

So,

p value for Cochran's Q statistics can easily be calculated using chi square distribution.

Significant p value indicates that significant heterogeneity is present, and we should consider other methods of

meta-analysis like random effects model or sub-group analysis or meta-regression.

2. Tau squared (τ2) statistics

τ2= (Q - (k -1)) / C

where,

C = Σ Wi - Σ Wi 2 / Σ Wi

Σ Wi = Sum total of weights of all included studies

Σ Wi2 = Sum total of squared weights of all included studies

3. I2 statistics

I2= 100 * (Q - (k -1)) / Q

I2 statistics is measured in percentage. I2 value of < 25% is considered as low heterogeneity,

between 25 and 50 it is moderate and above 50% it is significant heterogeneity.

All above measures of heterogeneity provides a quantified measure, but they can not identify the factors causing heterogeneity.

Random effects model

If fixed effect meta-analysis shows significant heterogeneity (by Cochran's Q or I2 as explained above), then random effects model is one way to deal

with heterogeneity.

In random effects model (DerSimonian‐Laird), weights of each study are revised as follows .

WRE = 1/ (V + τ2)

There are other methods used for random effects model such as maximum likelihood, restricted

maximum likelihood (REML), Paule‐Mandel, Knapp‐Hartung etc. However, DerSimonian‐Laird method is most widely used and robust.

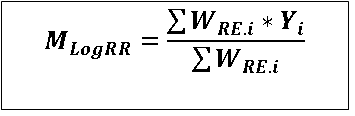

Then, summary Log OR (meta-analysis Log OR) under random effects model is calculated as follows

MLog OR = Summary Log OR

WRE.i= Revised weight for ith included study

Yi= Log OR for ith included study

Standard Error of MALog OR = 1/Σ WRE.i

Once summary Log OR and its standard error is calculated, it is fairly easy to calculate confidence intervals.

We can convert summary Log OR and its confidence intervals into original scale as described above under point 4(B).

Z value and p value.

Once summary Log OR and its standard error is calculated, it is fairly easy to calculate Z value and p value using normal distribution.

Z = MALog OR / Standard Error of MALog OR

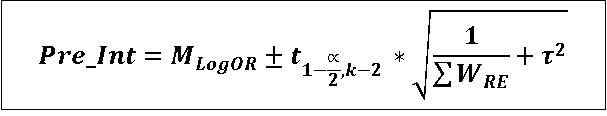

Prediction interval is calculated using following formula.

Where, t1-α/2, k-2 is (1−α/2)% percentile of t distribution with significance level α and k−2 degrees of freedom,

(k = number of studies included in the meta-analysis).

Interpretation: There is a 95% (or any other specified) probability that a newly conducted study will have effect size between this interval.

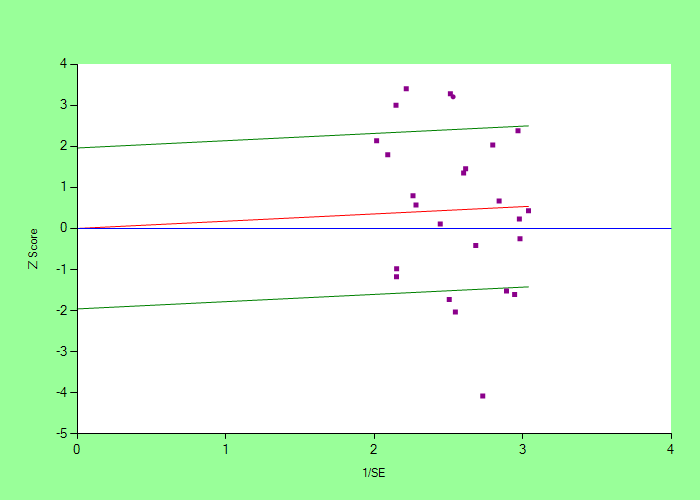

Galbraith Plot

The Galbraith plot can also provide graphical representation to assess heterogeneity in the included studies.

It is a scatter plot of Z value against precision (1/SE) of each included studies. (Z value of each study = Log OR / SELog OR).

The central horizontal blue line represents the line of null effect (Log OR = 0). Studies above this line has Log OR > 0 or (Odds Ratio > 1).

Studies below this line has Log OR < 0.

Middle red line represents the MA Log OR. Its slope is equal to the MA Log OR. Studies above this line has Log OR > MA Log OR. Studies below this line has Log OR < MA Log OR.

Two green line (above and below middle red line), represents the confidence intervals of MA Log OR.

In the absence of significant heterogeneity, we expect that about 95% (or other specified level) studies lie between the two green lines, and 5% (or other specified level) lie outside this. If more number of studies are

outside these lines, then it indicates significant heterogeneity.

In this Galbraith plot,

four studies are above the top green line and five studies are below bottom green line. So a total of 9 studies, out of 25 included studies are not within the zone bounded by two green lines, signifying heterogeneity.